The state of artificial intelligence, cognitive systems and consumer AI. Part I.

Update: Part II coming soon (2024), including a Primer on Artificial Cognitive Systems and hardware implementations.

Here’s a prediction: The next major shift in technology, particularly consumer technology, will be artificial intelligence (AI). More specifically, it will be AI seamlessly integrated into most consumer electronics, such as personal computers, mobile devices, game consoles, and even household appliances. The key to such technology is to capitalize on the human learning and collective memory that form human thought and “personality.”

| “The only way of discovering the limits of the possible is to venture a little way past them into the impossible.” –Arthur C. Clarke, Hazards of Prophecy: The Failure of Imagination, in Profiles of the Future (1962)

“Our imagination is stretched to the utmost, not, as in fiction, to imagine things which are not really there, but just to comprehend those things which are there.” –Richard P. Feynman (1965) |

What does it take to realize consumer-friendly AI technology? As one might expect, the answer depends on the functionality. AI systems that execute specific intelligent tasks have been around for years. They implement machine learning, planning, information extraction, decision making, and image, speech and language processing/recognition in various architectures (including distributed processing such as neural network algorithms). Even so, those systems have not permeated the consumer technology world as much as some of us would have imagined. Robotics, perhaps the most widely recognized AI application, has given us the Roomba (an autonomous vacuum cleaner). Siri, a virtual personal assistant developed by SRI Intl/DARPA and recently adapted by Apple to run on the latest iPhones, might be what many consider among the first serious steps in the consumer AI direction, but it has many limitations (more on that later in this series). Thus, the dream of the “AI Companion” is probably still some way from realization.

| For Parts II-IV of this series, plus other reviews and primers, Readers can visit a separate, dedicated site on Artificial Intelligence and Artificial Cognitive Systems HERE. |

Imagine an artificial system that intelligently learns from your actions and from your conversations with other people, responds to your half-certain queries with relevant answers or suggestions, fluidly provides companion-like responses and conversation, or solves problems you’ve only begun to formulate. Imagine also that such a system appears to have complex perception and thought, including a fluid understanding of a conversation or of your well-being, a sense of humor and a gift for metaphor, or a creative approach to a hard problem. Those of us immersed in the science fiction world have for years read about such constructs, dreaming of mature AI technology that ranges from a seamless intelligent learning system for the casual user to an interactive system that can pass a Turing Test and is indistinguishable from another thinking, breathing human (what I term the “AI Companion”).

Why haven’t we made much progress on bringing the wonders of AI (cognitive systems included) to the masses? What are the limitations and can we define them? Is there some grand challenge that we all ought to identify with?

Significant discoveries and technical advances are being made in neuroscience, biomedical engineering and neuroprosthetics that will enable us to understand at a fundamental level how the brain processes sensory information (neural encoding), stores and recalls knowledge (memory and learning), handles highly abstract concepts and thought, and enables complex motor control. My prediction is that these advances will have the most impact on AI.

More specifically, I predict that understanding the fundamentals of neuroplasticity and synaptic plasticity (the brain’s ability to structurally – anatomically and functionally – change as a result of programming and learning, from cellular changes to cortical remapping) will be key, and that such self-replication and self-reconfiguration features will be required for novel technologies implementing more general AI and “cognitive systems.”

In the shorter term, the consumer might expect that current technologies, such as application-specific integrated circuits (ASICs), reconfigurable computing architectures like field-programmable gate arrays (FPGAs), and even more specialized neuromorphic chips, along with neocortically-inspired algorithms, may suffice to provide limited “AI on a chip” tasks, akin to the goal-directed intelligence of intelligent agents, expanded natural language and speech capabilities, and neuroprosthetics (brain-machine interfaces included).

The goal of this essay and associated reviews is to concisely communicate the latest advances in AI, how they might translate to the human consumer world, and what they portend for the future in terms of the development of an “AI Companion” that is both intelligent and can think. Let me first review a bit of AI histrionics.


1. Are Penrose and Kurzweil both crazy?

Roger Penrose, in his book “The Emperor’s New Mind,”[1] argues that machines can never sport human intelligence and pass a true Turing Test, due to their algorithmically deterministic nature. He conjectures that human consciousness is “not ‘accidentally’ conjured up by a complicated computation,” that an algorithmically based system of logic is insufficient to simulate human intelligence, and that human thought may even be a physical paradox indescribable by “present conventional space-time descriptions.” Penrose stands at odds with AI proponents who believe that human intelligence and cognitive ability can be reproduced through algorithmic simulation on a ‘sufficient’ computing architecture.

Contrast Penrose with Ray Kurzweil, author of “The Age of Spiritual Machines: When Computers Exceed Human Intelligence.”[2] Kurzweil provides a convincing argument against Penrose’s thesis: if human intelligence and thought (consciousness) are formed on the basis of quantum decoherence from an inherently undisturbed evolution of the quantum coherences that comprise human brain function, then there is no physical or technological barrier to reproducing such a ‘system’ on a machine. However, basic quantum computing itself appears to be a grand challenge [3].

Are Penrose and Kurzweil both making AI into an eccentric endeavor that the average human consumer might never experience?

2. Strong AI vs. Weak AI

It is useful to distinguish between two forms of AI challenges: strong AI and weak AI. Strong AI is AI that displays human intelligence and can pass a Turing Test. Such an AI system can perform any intellectual task of a human and can display human thought. The key here though is human thought – consciousness, understanding, feeling, self-awareness and sentience. Philosopher John Searle popularized the strong AI hypothesis when he proposed the “Chinese Room Argument,” which states that an AI program cannot give a computer a “mind” or “understanding”, regardless of how intelligently it may make it behave. Searle asked the question: does the machine truly “understand” (“think”), or is it merely simulating the ability to understand (which would not be classified as equivalent to human thought)? Weak AI, sometimes termed “applied AI,” is AI that accomplishes specific problem solving or reasoning tasks that do not encompass the full range of human cognitive abilities, namely the literal display of human thought. As the reader might guess, many current and past AI programs and projects fit the weak AI strain, including expert systems and personal AI assistants. The Holy Grail and grand challenge of a cognitive strong AI system remains elusive.

Searle might appear to be just another pessimist, like Penrose, but he really isn’t. He never stated that a strong AI system with human consciousness cannot be designed and built; he simply added more rigor to the challenge. However, one (namely, I) might ask the following question: if an AI system can simulate human thought and pass a Turing Test, then who cares?

3. Minsky brings sanity to the problem, with a dose of skepticism

Marvin Minsky, a well-known strong AI proponent and researcher, framed the AI challenge succinctly in a short essay almost two decades ago [4]. In particular, Minsky outlines that a versatile AI will need a common sense knowledge base and multiple methods to reason and communicate. Rather than placing the AI challenge in the stratosphere, Minsky’s “theory matrix” of AI functionality implies that functionality vs. tractability is the metric to work toward, a metric that will improve with the evolution of technologies over time.

On the other hand, Minsky called the Loebner Prize, an annual competition for AI systems attempting practical Turing Tests, a publicity stunt. He may be correct, but the bottom line is that we need some competitive forum for strong AI; otherwise we will just keep churning out prototypes like Watson, Eliza and Rosette (the latter two being gifted chatterbots) that cannot think.

4. What is human “thought” and why is it so elusive?

Now that I’ve used the terms “thought” or “think” in addition to “intelligence” in describing what properties an AI might have in the future, I think I should explain why “thought” is so much harder than “intelligence” to capture and implement in the artificial domain. A major part of the answer is that we simply don’t fully understand human thought and cognition, either from the strict human neural architecture point of view, or from the plethora of artificial models that have been constructed for decades by neuroscientists, psychologists and computer scientists (and even philosophers). As Richard Feynman so aptly pointed out: “What I cannot create, I do not understand.” (I might add the term [re]create.)

Human consciousness, which maps into the idea of self-awareness, is a related concept that has the same quality of the unknown as human thought. One might think of human thought as encompassing consciousness, sub-consciousness and intelligence, but also a very relevant property, perception, which has to do with organizing and interpreting sensory information.

As the intellectual debates over “what is consciousness” rage in the ever-present form of popular bestsellers (I list a few of the better ones in [5]), some in the AI field have asked what the point would be to recreate human thought, particularly consciousness and perceptive qualia (defined as subjective sensations) in an AI, instead making the plea that we focus on taking the salient human intelligence features of the neocortex and using them to construct intelligent machines. Both Marvin Minsky and Jeff Hawkins [6] have argued this idea persuasively, and they come at it from a functional point-of-view: incorporating capability features that have remained quite challenging to AI designers, such as machine vision and natural speech and language recognition and generation. Hawkins in particular has concentrated on the reductionist idea that thoughts are predictions tied to the massive memory capacity of the neocortex, and when combined with sensory input, are our perceptions. This forms the basis for his memory-prediction framework of intelligence, which has evolved into a more formal hierarchical temporal memory algorithm for how the neocortex processes information: hierarchically and with ubiquitous temporal feedback. (Never mind that humans can be biased predictors, as pointed out by Daniel Kahneman [7].)

However, I think it is short-sighted to ignore the broader concept of human thought and whether we can incorporate features of it into an AI to enhance its appeal in terms of human utility and value, beyond “human intelligence” or predictive capability. Stated another way, there is a strong possibility that what we call “human intelligence” is inseparable from the broader features of “human thought” that aren’t always classifiable as simple predictions. A few prime examples include our fluid and effortless ability to understand natural speech and language, even the more nuanced features; our ability to make connections between disparate concepts or events, many times through subjective routes; our ability to form and manipulate abstractions; our creative and imaginative capabilities; our sense of humor or metaphor; and our ability to effortlessly perform analogical reasoning (form analogies).

Indeed, from a neurological perspective, the “binding problem” – how the brain unifies sensory representations with higher-level “invariant representations [8]” learned and stored in memory to form intelligent perception and thought – remains very much an unsolved problem. Unlike Penrose et al., I don’t share the view that the problem is intractable or that it requires as-yet-unknown, obscure theories or a new kind of science. I am in the camp that all that is “human thought” might be an emergence of the “critical mass” density of dynamic and plastic synaptic mapping and neuronal activation. The evidence mounts that there is dynamic cross-domain mapping and activation between processed sensory information (sound, vision, tactile…), short and long-term memory stores, speech and language centers, and motor control areas. Collective memory and learning (in addition to memory and predictive capability) are key elements. The goal is to understand and harness this dynamic mapping and activation/collective memory and learning complexity and to apply it to AI.

Sidebar 1: Voices from the Neuroscience Community, Take 1

I will ignore the philosophical discussion of what it would mean to create a “self-aware” AI that could possess qualities of a malevolent nature – my aim is to focus on qualities that enhance, not detract, and it seems to me that an AI that is “aware” of the malevolent side of human thought might itself have value. Morality, empathy, ethics and free will are all a part of human nature, and for an AI to grasp those concepts would be useful.

A final conundrum on this topic is whether a Turing Test is sufficient to gauge human-like qualities of an AI. This would presume that the test includes all ranges of human thought, and negates spurious outcomes. We certainly wouldn’t want an AI that passes but a human that fails – a case where the AI fooled the tester, who poorly designed the test. Philip K. Dick’s literary play on this in “Do Androids Dream of Electric Sheep” [9] is not forgotten.

5. AI and the “Grand Challenges”

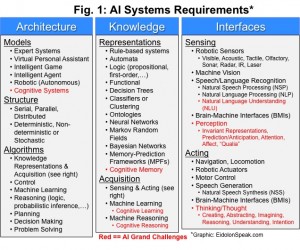

In defining AI systems, I find it helpful to view the scope via an “AI systems requirements” matrix, such as the one shown in Fig. 1. Architecture (models, structure, algorithms), knowledge (representations, acquisition) and interfaces (sensory or action-based) are all integral to any advanced AI.

Of the model systems listed on the left (expert systems, virtual personal assistants, intelligent games, intelligent agents, robotics (autonomous)), I review several state-of-the-art and recent consumer-based implementations in detail, specifically those shown in bold in Fig. 2. Those reviews are discussed below. Machine learning, knowledge representations & acquisition, machine vision and natural language processing (NLP) are four major elements of any potential AI system that will communicate and interact with a human, and I provide primers on these on a separate site area dedicated to AI (Click HERE for link to the AI site and primers).

The items in Fig. 1 that are bold red are AI grand challenges, and are particularly directed toward an “artificial cognitive system” or cognitive architecture implementing human cognitive ability, namely cognitive memory and learning, human-level natural language understanding (NLU), perception and thought.

As a convention, I apply the term “artificial cognitive system (ACS)” when the goal is to produce a strong AI with human cognitive abilities, and simply keep the term “AI” for systems implementing the weaker AI attributes (the majority of the requirements in Fig. 1).

Sidebar 2: Voices from the AI/CSE Community

6. AI reviewed: From the expert/superhuman/humanoid to the consumer/gamer/patient

In Fig. 2, I provide a list of AI model systems, descriptions and recent projects, classified by applied capability; those that are reviewed in detail are shown in bold.

My approach is interdisciplinary and broad, in that the scope of the projects reviewed transcends traditional computer science/engineering and robotics (where AI has found its home since its beginnings) and extends into the consumer, gamer and biomedical space, as well as computational neuroscience and neuromorphic engineering. My aim is to understand and communicate recent advances in both large-scale and consumer-driven applications, any innovative crosscurrents that would drive large-scale capability down to the consumer level, and major insights or breakthroughs that might lead to a truly cognitive AI.

Each applied area and its associated programs are covered in Parts II-IV of this series, available at these links:

• “The State of AI: Expert Systems, Intelligent Gaming and Databases, Virtual Personal Assistants”

• “The State of AI: Brain Simulations and Neuromorphic Engineering”

• “The State of AI: Intelligent Agents, Robotics and Brain-Machine Interfaces”

These reviews, along with primers and other material, are also accessible on a separate site area dedicated to AI (Click HERE for link to the AI site – reviews, primers, surveys, extended material).

All of the programs I review are known in the public domain; there may be proprietary or classified programs that exist, which are of course not covered. I invite readers who want to expand on my reviews and primers to submit letters or whole articles, and I will consider publishing them on the dedicated AI page. My goal is to make the AI theme a continued essay, review/survey and primer effort as technologies advance, including revolutions in our knowledge base of the human neural architecture itself.

7. The epilogue of AI: Artificial Cognitive Systems

What is Project ACS? Project ACS is meant to be a placeholder for a project that leads to the first serious artificial cognitive system (“ACS,” the term I introduced above) that, when suitably evolved, can pass a Turing Test without fooling the human tester, and may even look and act like a human (android, humanoid), though looks are not the top requirement. What do I mean by “evolved”? Just as humans must learn and grow based on their genetic makeup and environmental factors (phenotype characteristics), so too must an artificial cognitive system. I don’t think the learning and development process can be avoided (this is an important point missed by many designers), and learned machine replication is certainly an open area of research.

Among the major unsolved neuroscience puzzles that are likely to benefit AI and the development of an ACS are (1) the resolution of the neural architecture to such a degree as to produce applicable and practical cognitive model(s) and knowledge representation(s), and (2) the resolution of the “binding problem” discussed above. Resolving these puzzles must go beyond the usual “task dependent” focus, into a more generalized view and framework (hence the categorization of an ACS as a “grand challenge;” by “task dependent” I mean a focus on visual processing or unimodal processing, for example). In the following link, I list a number of key considerations for artificial cognitive systems (representations and architectures), based on the reviews, surveys and expert viewpoints I have presented in this series.

Sidebar 3: Key Considerations for Artificial Cognitive Systems – Representations and Architectures

As for the binding problem, this unsolved controversy has yielded a span of views, from those of V. Ramachandran and others [5], who find evidence from neurologically impaired patients that binding is indeed a relevant dynamic, to those of M. Nicolelis [26], who argues that binding may be nonexistent (or not as “discretized” as some interpret), to those of Edelman et al. [5] who have proposed a plausible model solution to the problem. The binding problem may seem like a philosophical nuance (or nuisance) that has no bearing on the development of an ACS, but I disagree. At the heart of the controversy is whether the processing of sensory information (including predictions about the information being sensed to enable recognition) and “intrinsic thought” follow two separate neural processing pathways. (Examples include the recognition of a face, as opposed to “intrinsically” visualizing that face via thought alone, or the recognition of tonal sequences, as opposed to intrinsically playing a melody via thought, or the cross-modal processing of language involving abstract concepts.) Neuroscience data on this is so far indeterminate, as even recent studies involving invasive measuring techniques on epileptic patients indicate (see Part 4 for a few recent studies).

If the brain does separate these functions only to integrate them at some level of processing, then why, and how, is this accomplished? This question has a potentially major bearing on how the neural architecture is structured, so as to enable speech and language understanding as well as abstract perception and thought. Some have argued that one unified “endogenous” view would make sense from the perspective of the allocation of the brain’s energy resources [10], a valid approach. No doubt the answer to this puzzle, which involves the real matters of self-awareness and the capabilities and capacities for abstract perception and thought, will require many more neurological studies and data, and will in turn impact the development of viable models and representations. Edelman and his colleagues/students [5,27] assess the role of selectional theories in developing models of the brain (e.g. neural Darwinism), defining testable predictions that would have an impact on the development of an ACS. More recently, the mapping efforts of Sporns et al. (see [27] and refs. therein) are on a productive track to produce the data and resolution necessary to test such theories and models.

Readers may note that I don’t have any other programs or projects listed in the category of “Cognitive Architectures” in Fig. 2, because as far as we publicly know, humankind has not yet developed such an ACS. In fact, no one has ever won the silver and gold levels of the Loebner Prize, which provides an annual platform for practical Turing Tests (see note, [5]).

(A visit to the Wiki site ‘comparison of cognitive architectures’ will provide some basis as well – note all the incomplete fields – this is due to lack of progress in this area. Most, if not all, of the projects listed are driven by computer science/pure AI researchers.)

Why is there a lack of significant progress in the area of cognitive architectures and a “Project ACS?”

| “In this age of specialization men who thoroughly know one field are often incompetent to discuss another. The great problems of the relations between one and another aspect of human activity have for this reason been discussed less and less in public.” –Richard P. Feynman, 1956 |

My view is that the AI community is still quite cloistered, lacking a wide interdisciplinary approach, and a healthy level of cross-pollination in ideas, discoveries, discussion and debate. Yes, the computer science/engineering community has morphed over the years into branches such as computational neuroscience and neuromorphic engineering, and this migration has been productive, but not completely so. Why? Within those branches we still see silo-ing and tunnel vision, a persistent linkage to the old paradigms of von Neumann computing and semiconductor-based architectures, and still no major contribution to truly cognitive architectures. The grand challenge of neuroplasticity (including combined features such as autonomy, self-replication and self-reconfiguration, and fault tolerance) is not being tackled enough.

(To be fair, there is a recent movement, “biologically-inspired cognitive architectures (BICA),” that was spawned in part by a DARPA program of the same name. Though the program was cancelled in 2007 for being too “aggressive,” the movement lives on in the form of a society of the same name, which organizes annual meetings and publishes progress updates HERE. After viewing some of the online videos of the recent Nov. 2011 meeting, I find that my critical comments still apply, and I recommend that participants in this movement continue to reach out to key peripheral fields that will have a major impact in designing cognitive architectures.)

Fields like cognitive neuroscience (including neurology, psychiatry, psychology), neurobiology and biomedical engineering have made and are making major discoveries including:

• A systemic understanding of the neural architecture:

- From the neurobiological level in understanding the cellular (neuronal-synaptic) mechanisms in memory and learning

- From the connectome level in mapping synaptic connectivity of the mammalian and human brain, using novel and yet-to-be-developed imaging and invasive/noninvasive biomedical tools and sensors (this will be part of NIH’s “Human Connectome” project, see also [11,27]; also visit the Open Connectome project, which provides some existing datasets); the connectome level can be split into cellular, macro and meso-scale levels

- From the biomedical level in developing practical neuroprosthetics that involve sensory and motor implants, including brain-machine interfaces (BMIs) and neuromorphic chips (more detail below)

- From the modular-functional level in charting out specific functional areas using various imaging tools such as fMRI (visit the Allen human brain atlas, a useful tool for visualization and data mining)

- From the psychiatric and psychological level in studying patients with neurological damage and associated psychometric data to gauge a deeper understanding of functional areas, memory and learning, language, perception and thought

• Practical progress in neuroprosthetics:

- Artificial vision implants/retinas based on vision research on the retina, optic nerve and visual cortex

- Auditory brainstem implants and cochlear implants based on audio-neural research on the cochlea, auditory nerve, inferior colliculus and auditory cortex

- Motor prosthetics or sensory-motor prosthetics that allow para- or quadriplegic, locked-in or ALS patients to regain conscious mobility based on sensory-motor-neural research on the spinal cord, somatic nervous system and motor cortex; such a prosthetic system includes brain-machine interfaces (BMIs) and neuromorphic chips developed by multidisciplinary teams reaching from biomedicine, neurology and cognitive neuroscience to bioengineering and neuromorphic engineering to materials science to electrical and mechanical engineering to robotics

- Cognitive prosthetics that may allow the restoration of cognitive functions lost to brain tissue damage from injury, disease or stroke, such as memory, cross-modal cognitive tasking and function, language and speech; such implants will require breakthroughs in materials science and engineering to replace the functionality of lost neural tissue that was originally plastic, dynamic and dense; this is the most speculative area of neuroprosthetics and is in its infancy, and it shares a great deal of potential overlap with cognitive architectures in AI

The challenge is for the AI community at large to tap into these discoveries and advances to draw more insights and ideas toward the goal of developing cognitive architectures or a successful Project ACS. I think the task requires breaking out of the silo or tunnel vision and forming a concerted, interdisciplinary effort. The researchers involved in the progress made on neuroprosthetics and BMIs should serve as a useful role model.

Sidebar 4: Voices from the Neuroscience Community, Take 2

Finally, this essay started with a dream: it turns out to be mine. Do humans dream of an “AI Companion?” Yes, they do.

–

Author’s Note: I should add that after I wrote this essay I came across a relatively recent debate between Ray Kurzweil and Paul Allen, co-founder of Microsoft and benefactor of the Allen Institute for Brain Science [12]. (Click HERE to read the debate text.) I found the debate unproductive. The reason we have not had significant progress in AI in terms of building a truly cognitive system is the prolonged lack of significant interdisciplinary effort between traditional AI and neuroscience and the vast range of disciplines in between, as argued above, as well as a lack of focus toward a common goal. My view is that all sides could and should be motivating one another to follow the same grand challenge, which I have called an “ACS.” What I see as an outsider (and I consider myself one, as I was not born into either field, but have a decent background in scientific research) is that both fields have wasted years debating largely philosophical issues (just visit your local library to view the plethora of books on consciousness and mind/brain deliberations – 30-odd years and 100s of tomes, written by AI and neuroscience luminaries alike), without committing to a grand challenge with a consistent, methodical path to get there. Likewise, the Allen/Greaves/Penrose “complexity brake” can be a blindsight hindrance, just as much as Kurzweil’s unfocused, misguided optimism. The singularity might not be near, but the commitment to a grand challenge sure as heck should be. QED

–

For more information, reviews, primers, surveys and thoughts, please visit the separate site area dedicated to AI (Click HERE for link to the AI site). I also welcome constructive and friendly comments, suggestions and dialogue (click on contact link at top).

Disclaimer: The material presented here and on the dedicated AI page(s) is intended for understanding and informative purposes; any intelligent opinions or critical appraisals, separate from facts presented, are wholly those of eidolonspeak.com/individual authors (i.e., me). I encourage readers who find factual errors, or who have alternative intelligent opinions and appraisals, to contact me. Cheers.

–

References and Endnotes:

[1] “The Emperor’s New Mind,” Roger Penrose, 1989.

[2] “The Age of Spiritual Machines: When Computers Exceed Human Intelligence,” Ray Kurzweil, 1999. See also “The Singularity is Near,” 2005.

[4] “Future of AI Technology,” Marvin L. Minsky, 1992.

[5] “A Brief Tour of Human Consciousness,” V.S. Ramachandran, Pi Press, 2004.

“The Quest for Consciousness, A Neurobiological Approach,” C. Koch, Roberts & Co, 2004.

“In Search of Memory, The Emergence of a New Science of Mind,” E.R. Kandel, W.W. Norton & Co., 2007.

“A Universe of Consciousness: How Matter Becomes Imagination,” G.M. Edelman and G. Tononi, Perseus Books, 2000.

“Synaptic Self: How Our Brains Become Who We Are,” J. LeDoux, Penguin, 2003.

“From Axons to Identity: Neurological Explorations of the Nature of the Self,” T.E. Feinberg, Norton & Co., 2009.

See Also:

“The Problem of Consciousness,” F. Crick and C. Koch in “The Scientific American Book of the Brain,” ed. Antonio R. Damasio, 1999.

“Can Machines Be Conscious,” C. Koch and G. Tononi, IEEE Spectrum, June 2008.

(The last reference contains a relatively newer proposal for a Turing Test, based on comparing metrics from an “Integrated Information Theory” of consciousness to empirical/phenomenological test data of real test subjects. In my view, a battery of practical Turing Tests based on a number of viable theories or models is probably a sound approach, not any one test, derived from one theory or model. I also do not share the relatively pessimistic view held by the authors, whom I consider key contributors from the neuroscience community. Koch/Tononi also need to buy into that grand challenge I argue for, the central argument of this essay.)

[6] “On Intelligence,” Jeff Hawkins, Owl Books, 2004.

[7] “Thinking, Fast and Slow,” Daniel Kahneman, Farrar, Straus and Giroux, 2011.

[8] Hawkins in [6] uses the term “invariant representation” to refer to the brain’s internal high-level stored pattern representation of images, sounds or any sensory information that may be stimulating the central nervous system. He correlates this invariant representation with the stability of neural cell firing high up in the cortical hierarchy, as a response to sensed lower-level fluctuating stimuli, denoting that the cortex has rendered a recognition (prediction) of those stimuli (using the invariant representation), even when the stimuli are “noisy” or include distortions, transformations and fluctuations. Examples include quick and easy facial recognition under blurry or distorted conditions, or the seamless ability to recognize music in any key or a familiar rhythm embedded in a new song. This is a type of auto-associative recognition using invariant representations (a stored pattern is recalled from a partial or noisy version of itself), but hetero-associative recognition (one pattern cues the recall of a different, linked pattern) is also possible.

Ramachandran in [5] uses the term “metarepresentation” to mean much the same thing, but also characterizes it as “a second ‘parasitic’ brain – or at least a set of processes – that has evolved in us humans to create a more economical description of the rather more automatic processes that are being carried out in the first brain…bear[ing] an uncanny resemblance to the homunculus that philosophers take so much delight in debunking…I suggest this homunculus is simply the metarepresentation itself, or another brain structure (or a set of novel new functions that involves a distributed network) that emerged later in evolution for creating metarepresentations, and that it is either unique to us humans or considerably more sophisticated than ‘chimpunculus.’ ” Ramachandran goes on to say that this metarepresentation “serves to emphasize or highlight certain aspects of the first [sensed representation] in order to create tokens that facilitate novel styles of subsequent computation, either for internally juggling symbols (“thought”) or for communicating ideas (“language”)…the parts of the brain tentatively involved in these novel styles of computation include the amygdala (that gauges emotional significance), the angular gyrus and Wernicke’s area clustered around the left temporo-parietal-occipital (TPO) junction (language and semantics centers), and the anterior cingulate, involved in ‘intention.’ ”

Ramachandran focuses on the significance of the metarepresentation to the emergence of language comprehension/meaning capacity and self-awareness, and defines a few tests on patients with neurological damage to investigate the extent of a “representation of a representation.” One interesting case he cites is the ‘blindsight’ syndrome, in which a patient with visual cortex damage cannot consciously see a spot of light shown to him but is able to use an alternative spared brain pathway to guide his hand unerringly to reach out and touch the spot: “I would argue that this patient has a representation of the representation – and hence no qualia ‘to speak of.’” He also cites a converse case, Anton’s syndrome, in which a patient who is blind owing to cortical damage denies that he is blind: “What he has, perhaps, is a spurious metarepresentation but no primary representation. Such a curious uncoupling or dissociations between sensation and conscious awareness of sensations are only possible because representations and metarepresentations occupy different brain loci and therefore can be damaged (or survive) independently of each other, at least in humans. (A monkey can develop a phantom limb but never Anton’s syndrome or hysterical paralysis.)”

The important point is made that “just as we have metarepresentations of sensory representations and percepts, we also have metarepresentations of motor skills and commands…which are mainly mediated by the supramarginal gyrus of the left hemisphere (near the left temple). Damage to this structure causes a disorder called ideomotor apraxia. Sufferers are not paralyzed, but if asked to ‘pretend’ to hammer a nail into a table, they will make a fist and flail at the tabletop. The left supramarginal gyrus is required for conjuring up an internal image – an explicit metarepresentation – of the intention and the complex motor-visual-proprioceptive ‘loop’ required to carry it out. That the representation itself is not in the supramarginal gyrus is shown by the fact that if you actually give a patient a hammer and nail he will often execute the task effortlessly, presumably because with the real hammer and nail as ‘props’ he doesn’t need to conjure up the whole metarepresentation.”

Ramachandran also discusses the role of the angular gyrus in the left hemisphere (located at the crossroads between the parietal lobe (touch and proprioception), the temporal lobe (hearing) and the occipital lobe (vision)) in “allowing the convergence of different sense modalities to create abstract, modality-free representations of things around us.” He argues from studies on patients that such cross-modal representations are closely linked to the ability to understand and grasp metaphor in language: patients with lesions in the angular gyrus are unable to grasp cross-modal metaphors.

That the brain learns and stores “idealized” representations for images, sounds, symbols, language, touch, smell or motor skills/motion that are later used for recognition and other associative tasks (or even the stuff of subconscious dreams) is not new. I do not know who originally came up with the concept, but Crick & Koch in [5] also discuss a similar “explicit representation,” which they also call a “latent representation.” Koch discusses such representations and their invariant nature at length in his book [5], along with his general goal of identifying the “neuronal correlates of consciousness.” I consider Hawkins’ general concept of an “invariant representation” to be a powerful one, and his neocortical structure processing model implementing “invariant representation” learning and memory storage for later predictive use with sensory stimuli simple and elegant, but he apparently only applies it to auto-associative recognition tasks. It does appear increasingly evident that for the complex processes of visual awareness the neocortex plays a dominant role, and auto-association perhaps the dominant process for predictive recognition.

I suspect that the conceptual power and utility of the “invariant representation” run quite deep in terms of human thought (conscious or subconscious), memory, recall, recognition, perception, etc., including the hard problems of language understanding and abstraction. For the latter, however, we likely need to apply hetero-associative and cross-modal processing. Ramachandran’s extended view of the structures producing metarepresentations and their interactions appears to go beyond simple neocortical structure processing. Deriving extended structure and processing models that capture the range of associative (and even non-associative) and cross-modal interactions is part of the grand challenge.

[9] “Do Androids Dream of Electric Sheep?” Philip K. Dick, 1968. This science fiction classic was the inspiration for Ridley Scott’s 1982 sci-fi flick, Blade Runner.

[10] “Two Views of Brain Function,” M.E. Raichle, Trends in Cognitive Sciences, vol. 14, p.180, 2010.

[12] “Paul Allen: The Singularity Isn’t Near,” P. Allen and M. Greaves, MIT Technology Review, Oct. 2011.

[13] “Recursive Distributed Representations,” J.B. Pollack, Artificial Intelligence, vol. 46 (1), p.77, Nov. 1990.

[14] “Implications of Recursive Distributed Representations,” J.B. Pollack, Advances in Neural Information Processing Systems I, 1989.

[15] “Neural Network Models for Language Acquisition: A Brief Survey,” J. Poveda and A. Vellido, Lecture Notes in Computer Science, vol. 4224, p. 1346, 2006.

[16] “Learning Multiple Layers of Representation,” G.E. Hinton, Trends in Cognitive Sciences, vol. 11, p.428, 2007.

[17] “What Kind of Graphical Model is the Brain?” G.E. Hinton, International Joint Conference on Artificial Intelligence, 2005.

[18] “Energy-Based Models in Document Recognition and Computer Vision,” Y. LeCun et al., Ninth Intl. Conf. on Document Analysis and Recognition, 2007.

[19] “Learning Deep Architectures for AI,” Y. Bengio, Foundations and Trends in Machine Learning, vol. 2 (1), 2009.

[20] “Modeling Neural Networks for Artificial Vision,” K. Fukushima, Neural Information Processing, vol. 10, 2006.

[21] “Temporal binding and neural correlates of sensory awareness,” A.K. Engel and W. Singer, Trends in Cognitive Sciences, vol. 5, 2001.

[22] “Connectivity and complexity: the relationship between neuroanatomy and brain dynamics,” O. Sporns, G. Tononi and G.M. Edelman, Neural Networks, vol. 13, 2000.

[23] “A Theory of Cortical Responses,” K. Friston, Phil. Trans. R. Soc. B, vol. 360, 2005.

[24] “Hierarchical Models in the Brain,” K. Friston, PLoS Computational Biology, vol. 4, 2008.

[25] “Towards a Mathematical Theory of Cortical Micro-circuits,” D. George and J. Hawkins, PLoS Computational Biology, vol. 5, 2009.

[26] “Beyond Boundaries: The New Neuroscience of Connecting Brains with Machines - and How it will Change Our Lives,” Miguel Nicolelis, Henry Holt & Co., 2011.

[27] “Networks of the Brain,” Olaf Sporns, MIT Press, 2011.